Project CooperativeCritters

The CooperativeCritters (CC) project aims to study the emergent properties and dynamics of evolutionary and genetic algorithms (GAs) in the context of social behavior. The emphasis is on the co-evolution of the traits and behaviors of all individuals. If fitness is no longer ascribed to a single individual, but to a group of individuals, what kind of solutions can we expect to emerge in a population of such evolving agents? This project is still in its infancy, but its basic structure, design and approach are described below. Demos of actual simulations are forthcoming!

Background

Traditionally, genetic algorithms can provide solutions to problems that are intractable or computationally expensive for classical fitting and optimization approaches. Central to a GA is the creation of a large population of individuals, or agents. Each individual starts with the same basic structure for the computations it is allowed to perform, plus random or best-estimate values for the parameters used in that computation. For example, when estimating a quadratic function of the form $y = ax^2 + bx + c$, all agents can be given the same functional form, but each individual has its own initial set of parameters (e.g. a=2, b=1, c=0.5). This allows each agent to compute a predicted output y for some input x. The error between the prediction and observed ground-truth data then defines fitness: the smaller the error, the fitter the individual agent.

After the fitness of each agent has been determined, a subset of the agents with the highest fitness is selected to reproduce. Reproduction can be done in many different ways, but it should always produce offspring (new agents) that inherit 'traits' from both parents. In our example, offspring Z of parents X and Y might inherit the value of a from parent X and the value of b from parent Y, while its value of c could be the average of the two parents' values. In addition, we might apply random mutations, in which inherited parameter values are adjusted, for instance by adding noise or applying some non-linear transformation. Under the right circumstances and with the right evolutionary space (all values of a, b and c that can be reached through reproduction and mutation), the population will eventually evolve towards an optimum, where the parameters across the population accurately describe the function that best fits our data. For some problems, this approach has been shown to find solutions faster than traditional methods, or to find them where those methods fail altogether.
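The selection, crossover and mutation loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project's own code: the "ground truth" is synthetic data generated from hypothetical true parameters a=3, b=-2, c=1, fitness is the negative mean squared error, selection simply keeps the 20 fittest agents (truncation selection with elitism), and mutation adds Gaussian noise. The crossover rule mirrors the example in the text: a from parent X, b from parent Y, c averaged.

```python
import random

# Hypothetical ground truth: y = 3x^2 - 2x + 1 sampled on [-2, 2] (an assumption for illustration).
TRUE_PARAMS = (3.0, -2.0, 1.0)
XS = [i / 10 for i in range(-20, 21)]


def predict(params, x):
    a, b, c = params
    return a * x * x + b * x + c


YS = [predict(TRUE_PARAMS, x) for x in XS]


def fitness(params):
    # Negative mean squared error: the smaller the error, the fitter the agent.
    mse = sum((predict(params, x) - y) ** 2 for x, y in zip(XS, YS)) / len(XS)
    return -mse


def crossover(parent_x, parent_y):
    # a from parent X, b from parent Y, c as the average of both parents.
    return (parent_x[0], parent_y[1], (parent_x[2] + parent_y[2]) / 2)


def mutate(params, rate=0.2, scale=0.1):
    # With probability `rate`, add Gaussian noise to each parameter.
    return tuple(p + random.gauss(0, scale) if random.random() < rate else p for p in params)


def evolve(pop_size=100, generations=200, n_parents=20, seed=0):
    random.seed(seed)
    population = [tuple(random.uniform(-5, 5) for _ in range(3)) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[:n_parents]  # the fittest agents survive unchanged
        children = [mutate(crossover(*random.sample(parents, 2)))
                    for _ in range(pop_size - n_parents)]
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(best, fitness(best))
```

Keeping the parents in the next generation guarantees the best fitness never decreases; other schemes (tournament selection, no elitism) would work just as well for this illustration.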
This approach explores the solution space efficiently. Individuals might get stuck in a local minimum, but then they either 'perish', or their offspring inherit only part of that local minimum and therefore have a chance to escape it.

Altruism over Individualism

However, these agents, and the algorithms their genetic code expresses, live in a harsh 'every agent for itself' world: only the fittest survive and recombine to produce the next generation. To make matters worse, such an approach uses a strict Darwinian definition of evolution: once an individual is born, its traits have been inherited from its parents, making it fit to survive (or not) from day 1. This initial fitness determines the eventual outcome, namely whether the individual contributes to the next generation. Nothing the agent experiences during its lifetime has any effect on its genetic blueprint or its fitness.

Yet it has been known for some time that this view is incomplete, and in part incorrect. Experimental research has shown that (drastic) changes in the environment can influence the genetic code of humans and animals, or more precisely: whether and how genes are expressed. This field, called epigenetics, is still maturing, but it seems to supply the much-needed environmental influence on an individual that also shapes its future success. And for social animals like us, the environment is full of one particular source of influence: our kin. With this in mind, I started work on CooperativeCritters, to study the dynamics of a population of agents sharing an environment.

World

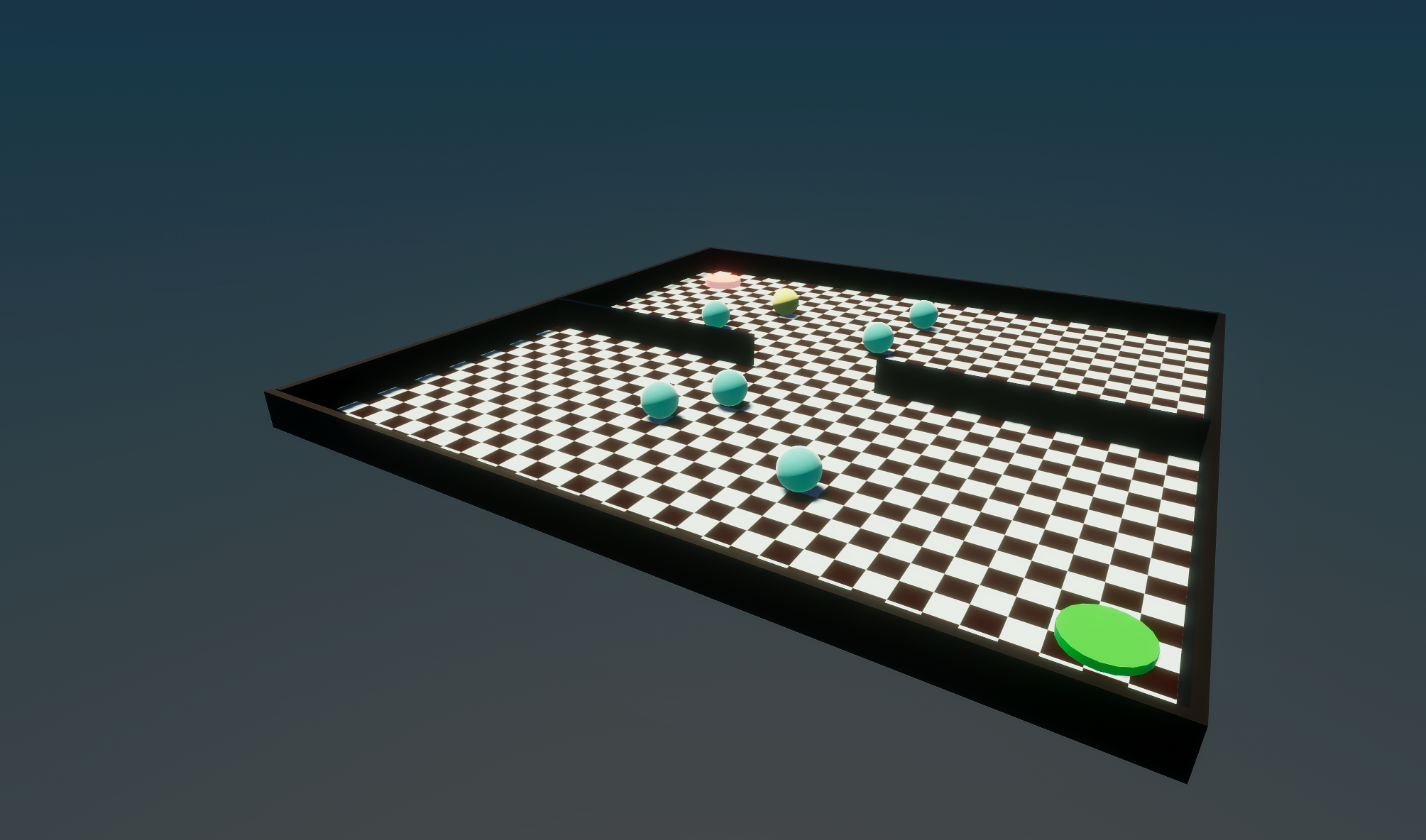

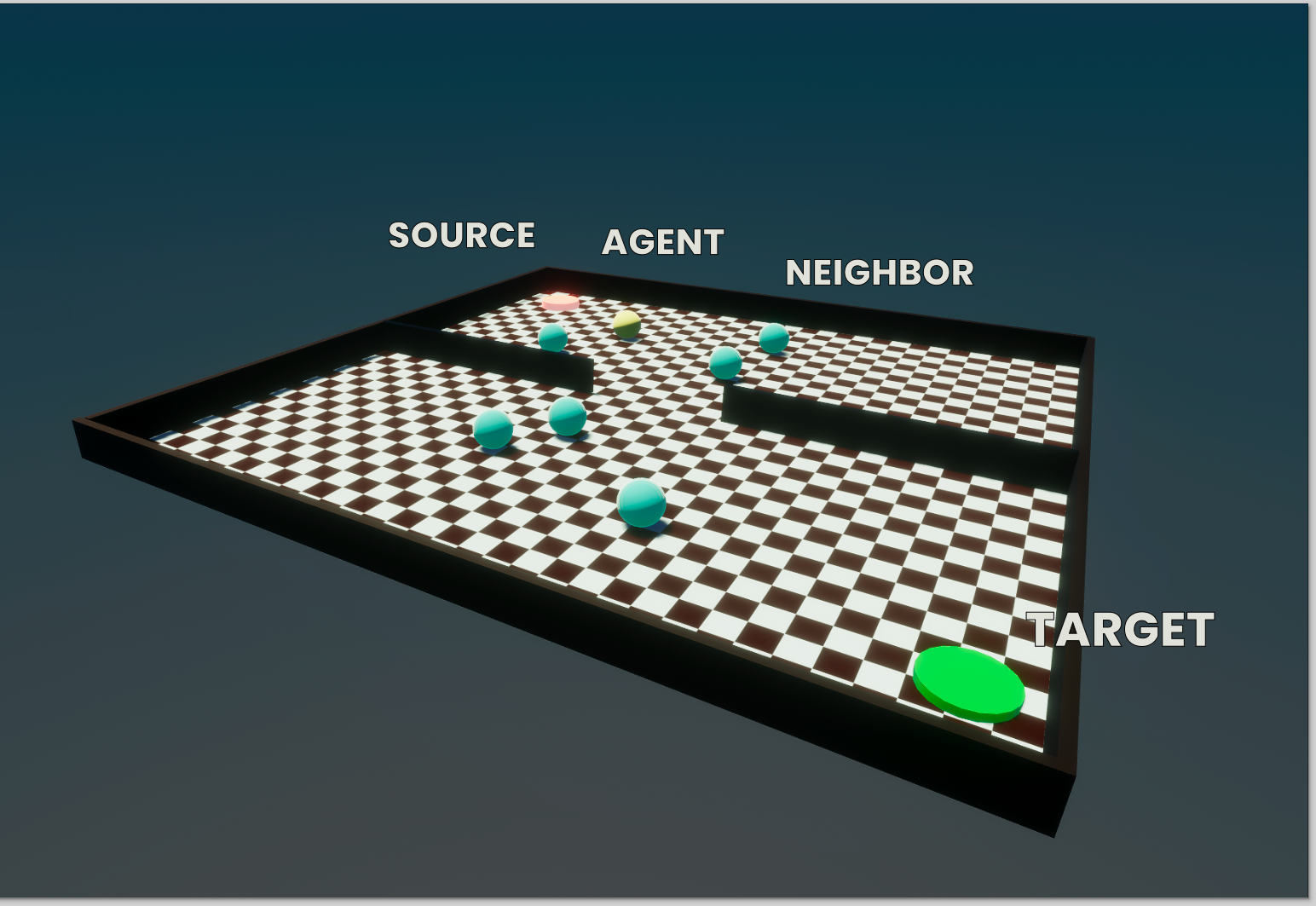

The world within CC is deliberately simple. For now, it is a two-dimensional plane with fixed outer boundaries. Each agent is represented by a simple rigid-body sphere that obeys Newtonian physics. Gravity keeps all agents resting on the surface of the plane, on which they can move in any direction. Because they are rigid bodies, however, they cannot pass through the outer boundaries of their world, nor through objects placed within it, including other agents.
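The constraints of this world (a bounded plane, spheres that cannot overlap) can be illustrated with a small kinematic sketch. This is a hypothetical simplification, not a full rigid-body solver: the `Sphere` class, `step` and `clamp_to_world` are names invented for illustration, gravity is implicit because motion is restricted to the plane, and collisions are resolved by pushing overlapping pairs apart rather than by exchanging momentum as a physics engine would.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Sphere:
    """Hypothetical agent body: a rigid sphere resting on the 2D plane."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    radius: float = 0.5


def clamp_to_world(s: Sphere, width: float, height: float) -> None:
    # Keep the whole sphere inside the fixed outer boundaries.
    s.x = min(max(s.x, s.radius), width - s.radius)
    s.y = min(max(s.y, s.radius), height - s.radius)


def step(bodies: List[Sphere], width: float, height: float, dt: float = 0.1) -> None:
    # Integrate positions; motion is restricted to the plane (gravity is implicit).
    for s in bodies:
        s.x += s.vx * dt
        s.y += s.vy * dt
        clamp_to_world(s, width, height)
    # Push overlapping pairs apart along the line joining their centres.
    for i in range(len(bodies)):
        for j in range(i + 1, len(bodies)):
            a, b = bodies[i], bodies[j]
            dx, dy = b.x - a.x, b.y - a.y
            dist = math.hypot(dx, dy)
            overlap = a.radius + b.radius - dist
            if overlap > 0 and dist > 0:
                nx, ny = dx / dist, dy / dist
                a.x, a.y = a.x - nx * overlap / 2, a.y - ny * overlap / 2
                b.x, b.y = b.x + nx * overlap / 2, b.y + ny * overlap / 2
                clamp_to_world(a, width, height)
                clamp_to_world(b, width, height)
```

In the actual project a physics engine would presumably handle this; the sketch only makes the two constraints (walls and non-overlapping bodies) concrete.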

Agent Input

Agents receive inputs encoded as vectors, where each vector represents some quantity observed by their imaginary senses. First, an agent can be given a 2D vector representing the location of a target destination relative to its own location. Note that this need not be the exact ground-truth vector. Additional parameters control the distance at which the target can be sensed at all: if the agent is too far away, the vector pointing from the agent to its target is simply null. When the target location is not observable or unknown to the agent, it can use a second set of inputs: the vectors between the agent and all other agents it can observe within some range. This gives each agent an indirect approximation of the target location. For example, if the agent assumes that all agents (itself included) are moving towards the same target location, then the average vector from the agent to all its neighbors can be used, with some degree of certainty, as a proxy for the target location.
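The two kinds of input, and the fallback from one to the other, can be sketched as below. The function names, the use of `None` for the null vector, and the default ranges (`sense_range=5.0`, `view_range=3.0`) are hypothetical choices for illustration only.

```python
import math
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, float]


def target_vector(agent: Vec, target: Vec, sense_range: float) -> Optional[Vec]:
    """Vector from agent to target, or None (null) when the target is out of sensing range."""
    dx, dy = target[0] - agent[0], target[1] - agent[1]
    if math.hypot(dx, dy) > sense_range:
        return None
    return (dx, dy)


def neighbour_proxy(agent: Vec, others: Sequence[Vec], view_range: float) -> Optional[Vec]:
    """Average vector from the agent to all visible neighbours, used as a target proxy."""
    visible = []
    for ox, oy in others:
        dx, dy = ox - agent[0], oy - agent[1]
        if math.hypot(dx, dy) <= view_range:
            visible.append((dx, dy))
    if not visible:
        return None
    n = len(visible)
    return (sum(v[0] for v in visible) / n, sum(v[1] for v in visible) / n)


def sense(agent: Vec, target: Vec, others: Sequence[Vec],
          sense_range: float = 5.0, view_range: float = 3.0) -> Optional[Vec]:
    # Prefer the direct target vector; fall back to the neighbour average when it is null.
    direct = target_vector(agent, target, sense_range)
    return direct if direct is not None else neighbour_proxy(agent, others, view_range)
```

For example, `sense((0.0, 0.0), (30.0, 40.0), [(2.0, 0.0)])` cannot see the target 50 units away, so it returns the neighbour-based estimate `(2.0, 0.0)`.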

Agent Output

The agent has a single actuator output: its velocity vector, i.e. the direction and speed in which it will move next.

Agent State

An agent's state evolves over time with some degree of predictability, so retaining past states internally can be advantageous. The 'state' of each agent can be represented by some aggregation or quantification of the values previously computed in the transformation from inputs to outputs. Feeding these values back into the next computation therefore serves as a form of memory.
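One possible aggregation is an exponential moving average of the values computed at each step. This is an assumption, not necessarily what CC uses: `update_state` and its `decay` parameter are hypothetical, with `decay` controlling how long the memory lasts.

```python
from typing import List


def update_state(state: List[float], activations: List[float], decay: float = 0.9) -> List[float]:
    """Blend the newest activations into the stored state (exponential moving average).

    decay close to 1.0 -> long memory; decay close to 0.0 -> state tracks only the last step.
    """
    return [decay * s + (1.0 - decay) * a for s, a in zip(state, activations)]


state = [0.0, 0.0]
for activations in ([1.0, -1.0], [1.0, -1.0]):
    state = update_state(state, activations, decay=0.5)
print(state)  # [0.75, -0.75]
```

The stored state would then be appended to the next set of inputs, so earlier steps keep influencing later outputs.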

Agent Computations and Transformations

Internally, each agent uses a multilayer perceptron to transform both its inputs and its internal state from the previous computation into a new output: a change in direction, speed and acceleration. The number of hidden layers, and of nodes per hidden layer, can vary depending on the metabolic resources allocated to the agent (see below).
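A minimal sketch of such a controller in plain Python is shown below. Several details are assumptions rather than the project's design: the class name `AgentBrain`, tanh hidden activations, a linear output read as a 2D velocity `(vx, vy)`, and feeding back the last hidden layer's activations as the internal state. The `hidden_sizes` argument stands in for the architecture a metabolic budget would allow; how that budget maps to layer sizes is left open here.

```python
import math
import random
from typing import List


class AgentBrain:
    """Hypothetical MLP controller: [inputs + previous state] -> hidden layers -> (vx, vy)."""

    def __init__(self, n_inputs: int, hidden_sizes: List[int], seed: int = 0):
        rng = random.Random(seed)
        # The last hidden layer's activations are fed back next step as memory.
        self.state = [0.0] * hidden_sizes[-1]
        sizes = [n_inputs + len(self.state)] + list(hidden_sizes) + [2]
        # One weight matrix per layer; each row holds the input weights plus a trailing bias.
        # These numbers are what a GA would recombine and mutate.
        self.layers = [
            [[rng.gauss(0.0, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]

    @staticmethod
    def _dense(weights, xs, activation):
        # Fully connected layer: activation(w . x + bias) for each output node.
        return [activation(sum(w * x for w, x in zip(row[:-1], xs)) + row[-1]) for row in weights]

    def act(self, inputs: List[float]) -> List[float]:
        h = list(inputs) + self.state
        for layer in self.layers[:-1]:
            h = self._dense(layer, h, math.tanh)
        self.state = h  # remember the last hidden activations for the next step
        return self._dense(self.layers[-1], h, lambda v: v)  # linear output: velocity (vx, vy)


brain = AgentBrain(n_inputs=4, hidden_sizes=[8, 6])
velocity = brain.act([0.1, -0.2, 0.3, 0.0])
```

Using tanh keeps the fed-back state bounded in [-1, 1], which stops the recurrent memory from growing without limit over many iterations.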

Collective and Individual Levels of Fitness

Fitness is computed after a fixed number of iterations, counted from the moment the agents are placed at their own starting locations. It is computed both for each individual agent (how close it got to target location B) and for the group (the number of individuals that successfully reached target location B, relative to the group size). By adjusting how these two fitness metrics are weighted to produce an agent's final fitness, we can vary the degree to which an individual is purely egoistic (the only thing that matters is reaching the goal itself) or purely altruistic (even if it never reaches the target location itself, it is still considered extremely fit, as long as many other agents make it).
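The weighting between the two fitness levels can be sketched as a linear blend controlled by a single hypothetical `altruism` parameter. The linear distance scaling in `individual_fitness`, the `max_dist` and `success_radius` parameters, and the linear blend itself are illustrative assumptions; the project may combine the metrics differently.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def individual_fitness(final_pos: Vec, target: Vec, max_dist: float) -> float:
    # Hypothetical scaling: 1.0 at target B, falling linearly to 0.0 at max_dist or beyond.
    return max(0.0, 1.0 - math.dist(final_pos, target) / max_dist)


def group_fitness(final_positions: List[Vec], target: Vec, success_radius: float) -> float:
    # Fraction of the group that ended within success_radius of target B.
    hits = sum(math.dist(p, target) <= success_radius for p in final_positions)
    return hits / len(final_positions)


def total_fitness(own: float, group: float, altruism: float) -> float:
    # altruism = 0.0 -> purely egoistic, 1.0 -> purely altruistic.
    return (1.0 - altruism) * own + altruism * group


target = (10.0, 10.0)
positions = [(0.0, 0.0), (9.5, 10.0), (10.0, 10.0), (10.0, 9.5)]
group = group_fitness(positions, target, success_radius=1.0)
own = individual_fitness(positions[0], target, max_dist=20.0)
print(total_fitness(own, group, altruism=1.0))  # 0.75
```

Here the first agent never gets close to B, yet with `altruism=1.0` it still scores 0.75 because three of the four group members made it; with `altruism=0.0` its score would be its own, much lower, individual fitness.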